Claude Code Compaction: How Context Management Works

How Claude Code manages the 200K token context window — compaction prompts, auto-compact triggers, and file restoration

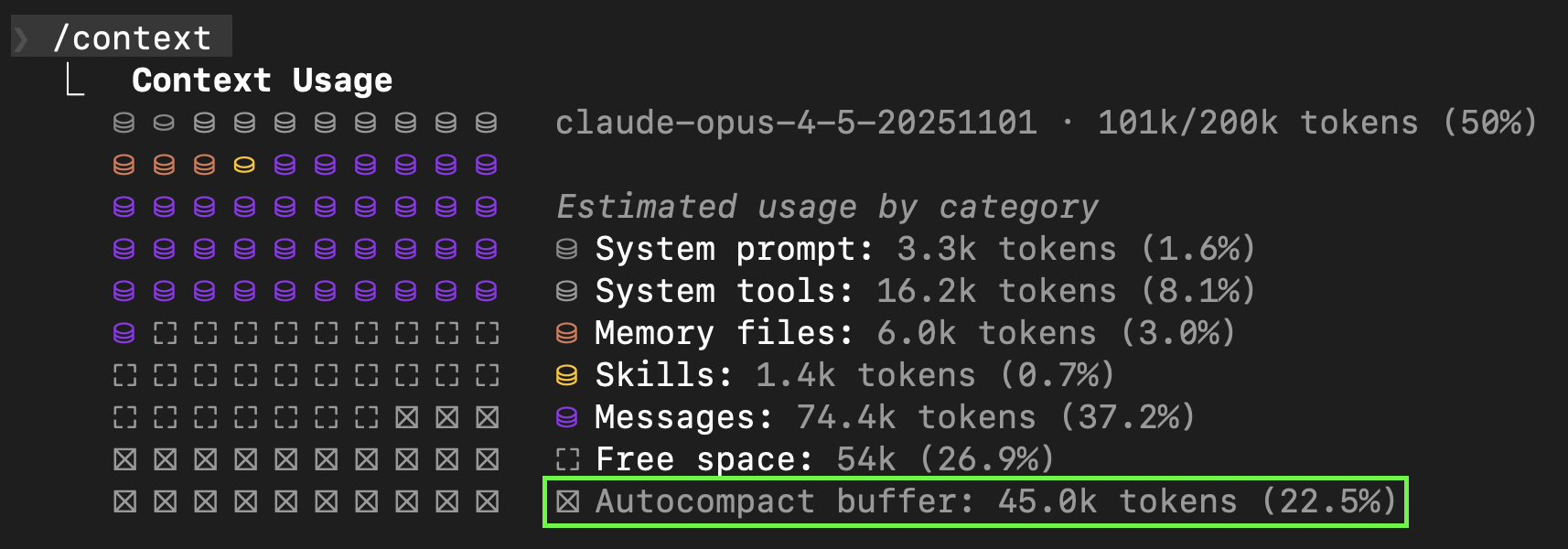

TL;DR: Claude Code uses a 9-section structured prompt to summarize conversations when context reaches ~78% capacity. It keeps the last 3 tool results in context (microcompaction), re-reads your 5 most recent files after summarizing, and tells Claude to continue without re-asking what you want. Consider running /compact at task boundaries rather than waiting for auto-compact.

Verbatim prompts and logic extracted from @anthropic-ai/claude-code v2.1.17.

The Context Window

The context window is everything Claude can see at once: system prompts, your messages, responses, tool outputs, file contents. Claude Code uses a 200K token context window by default.

What is Compaction?

Compaction is summarization plus context restoration. After summarizing, Claude Code re-reads your recent files, restores your task list, and tells Claude to pick up where it left off.

| Approach | What it does |
|---|---|
| Truncation | Cut old messages. Simple but lossy. |
| Summarization | Condense conversation into summary. Preserves meaning but loses detail. |
| Compaction | Summarize + restore recent files + preserve todos + inject continuation instructions. |

How It Works

Claude Code manages context through three user-facing mechanisms:

- Microcompaction — offloads large tool outputs to disk

- Auto-compaction — summarizes conversation when approaching context limit

- Manual /compact — user-triggered summarization

1. Microcompaction

When tool outputs get large, Claude Code saves them to disk and keeps only a reference in context. The last 3 tool results stay in full; older ones become Tool result saved to: /path/to/file.

Applies to: Read, Bash, Grep, Glob, WebSearch, WebFetch, Edit, Write

Thresholds:

- Triggers when offloading would save at least 20K tokens

- Targets a 40K token budget for tool results

- Always keeps the 3 most recent results in full
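The thresholds above can be sketched roughly as follows. This is an illustrative model, not the extracted implementation: the `ToolResult` type, the `microcompact` function, and the oldest-first offloading order are assumptions made for the example; only the three numeric constants come from the text.

```python
from dataclasses import dataclass

KEEP_RECENT = 3                 # most recent results always stay in full
MIN_SAVINGS_TOKENS = 20_000     # only offload if it frees at least this much
TARGET_BUDGET_TOKENS = 40_000   # desired total size of tool results in context


@dataclass
class ToolResult:
    tool: str                   # e.g. "Read", "Bash" (hypothetical record type)
    tokens: int
    offloaded: bool = False


def microcompact(results: list[ToolResult]) -> int:
    """Offload the oldest results until the budget is met; return tokens saved."""
    candidates = results[:-KEEP_RECENT]  # empty when there are 3 or fewer results
    total = sum(r.tokens for r in results if not r.offloaded)

    to_offload = []
    for result in candidates:            # assumed order: oldest first
        if total <= TARGET_BUDGET_TOKENS:
            break
        if not result.offloaded:
            to_offload.append(result)
            total -= result.tokens

    saved = sum(r.tokens for r in to_offload)
    if saved < MIN_SAVINGS_TOKENS:
        return 0                         # not worth it, leave everything in place
    for result in to_offload:
        result.offloaded = True          # would become "Tool result saved to: ..."
    return saved
```

In this sketch, a history of five results sized 30K, 25K, 5K, 4K, and 3K tokens offloads only the oldest one: that alone brings the total under 40K and saves more than 20K.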

2. Auto-Compaction

Claude Code reserves space for two things: model output tokens and a safety buffer for the compaction process.

Available = ContextWindow - OutputTokensReserved

Threshold = Available - 13000 (safety buffer)

| Output Reserved | Available | Trigger Point | Autocompact Buffer |
|---|---|---|---|
| 32K (default) | 168K | ~155K (~78%) | 45K (22.5%) |
| 64K (max) | 136K | ~123K (~61%) | 77K (38.5%) |

By default, Claude Code reserves 32K for output tokens (CLAUDE_CODE_MAX_OUTPUT_TOKENS=32000), triggering autocompact at ~78%. The /context command shows this breakdown:

The “Autocompact buffer: 45K (22.5%)” is the reserved space (32K output + 13K safety). When “Free space” depletes to zero, autocompact triggers.
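The arithmetic behind these figures is easy to reproduce. The sketch below recomputes the trigger point from the two formulas above; the function names are made up for illustration, and only the 200K window, the output reservation, and the 13K safety buffer come from the text.

```python
SAFETY_BUFFER = 13_000  # tokens held back for the compaction process


def autocompact_threshold(context_window: int = 200_000,
                          output_reserved: int = 32_000) -> int:
    """Token count at which auto-compaction would trigger."""
    available = context_window - output_reserved
    return available - SAFETY_BUFFER


def autocompact_buffer(output_reserved: int = 32_000) -> int:
    """Reserved space shown by /context: output reservation plus safety buffer."""
    return output_reserved + SAFETY_BUFFER


if __name__ == "__main__":
    for reserved in (32_000, 64_000):
        threshold = autocompact_threshold(output_reserved=reserved)
        share = threshold / 200_000
        print(f"reserved={reserved}: trigger at {threshold} tokens ({share:.1%})")
```

With the default reservation this gives 155,000 tokens (77.5% of the window); with 64K reserved it gives 123,000 tokens (61.5%).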

If you set CLAUDE_CODE_MAX_OUTPUT_TOKENS=64000 to use the full output capacity, the trigger drops to ~61%. The system waits until you have at least 10K tokens before considering compaction, then checks every 5K tokens or 3 tool calls.

{

minimumMessageTokensToInit: 10000, // Don't compact tiny conversations

minimumTokensBetweenUpdate: 5000, // Check every 5K tokens

toolCallsBetweenUpdates: 3 // Or every 3 tool calls

}

3. The /compact Command

Trigger compaction manually, optionally with custom instructions to guide what gets preserved:

/compact # Use defaults

/compact Focus on the API changes # Custom focus

/compact Preserve the database schema decisions

For persistent customization, add a section to your CLAUDE.md:

## Compact Instructions

When summarizing, focus on TypeScript code changes and

remember the mistakes made and how they were fixed.

These instructions are appended to every compaction prompt.
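One way to picture "appended to every compaction prompt" is the sketch below. It is an assumption-laden illustration: the helper names, the regex-based section lookup, and the blank-line join are all invented here, and the real tool may locate and attach these instructions differently.

```python
import re


def extract_compact_instructions(claude_md: str) -> str:
    """Return the body of the '## Compact Instructions' section, or ''."""
    match = re.search(
        r"^## Compact Instructions[ \t]*\n(.*?)(?=^#{1,2} |\Z)",
        claude_md,
        flags=re.MULTILINE | re.DOTALL,
    )
    return match.group(1).strip() if match else ""


def build_compaction_prompt(base_prompt: str, claude_md: str = "",
                            inline_instructions: str = "") -> str:
    """Append CLAUDE.md and /compact instructions to the base prompt (hypothetical)."""
    extras = [extract_compact_instructions(claude_md), inline_instructions.strip()]
    return "\n\n".join([base_prompt] + [text for text in extras if text])
```

The section body ends at the next level-1 or level-2 heading, so deeper subsections stay part of the instructions.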

The Compaction Prompt

When compaction triggers, Claude receives this instruction:

System: “You are a helpful AI assistant tasked with summarizing conversations.”

Instruction:

Your task is to create a detailed summary of the conversation so far,

paying close attention to the user's explicit requests and your

previous actions.

This summary should be thorough in capturing technical details, code

patterns, and architectural decisions that would be essential for

continuing development work without losing context.

Before providing your final summary, wrap your analysis in <analysis>

tags to organize your thoughts and ensure you've covered all necessary

points. In your analysis process:

1. Chronologically analyze each message and section of the conversation.

For each section thoroughly identify:

- The user's explicit requests and intents

- Your approach to addressing the user's requests

- Key decisions, technical concepts and code patterns

- Specific details like:

- file names

- full code snippets

- function signatures

- file edits

- Errors that you ran into and how you fixed them

- Pay special attention to specific user feedback that you received,

especially if the user told you to do something differently.

2. Double-check for technical accuracy and completeness, addressing

each required element thoroughly.

Your summary should include the following sections:

1. Primary Request and Intent: Capture all of the user's explicit

requests and intents in detail

2. Key Technical Concepts: List all important technical concepts,

technologies, and frameworks discussed.

3. Files and Code Sections: Enumerate specific files and code sections

examined, modified, or created. Pay special attention to the most

recent messages and include full code snippets where applicable and

include a summary of why this file read or edit is important.

4. Errors and fixes: List all errors that you ran into, and how you

fixed them. Pay special attention to specific user feedback that

you received, especially if the user told you to do something

differently.

5. Problem Solving: Document problems solved and any ongoing

troubleshooting efforts.

6. All user messages: List ALL user messages that are not tool results.

These are critical for understanding the users' feedback and

changing intent.

6. Pending Tasks: Outline any pending tasks that you have explicitly

been asked to work on.

7. Current Work: Describe in detail precisely what was being worked on

immediately before this summary request, paying special attention

to the most recent messages from both user and assistant. Include

file names and code snippets where applicable.

8. Optional Next Step: List the next step that you will take that is

related to the most recent work you were doing. IMPORTANT: ensure

that this step is DIRECTLY in line with the user's most recent

explicit requests, and the task you were working on immediately

before this summary request. If your last task was concluded, then

only list next steps if they are explicitly in line with the users

request. Do not start on tangential requests or really old requests

that were already completed without confirming with the user first.

If there is a next step, include direct quotes from the most recent

conversation showing exactly what task you were working on and where

you left off. This should be verbatim to ensure there's no drift in

task interpretation.

(Note: section 6 is listed twice; this typo exists in the source.)

The structured format ensures nothing critical gets lost. Each section acts as a checklist — user intent, errors, and current work all have dedicated slots.

Output Processing

The model outputs its response wrapped in XML tags. Before storage, these are transformed into plain text labels:

| Model Output | Stored As |
|---|---|
| <analysis>...</analysis> | Analysis: + newline + content |
| <summary>...</summary> | Summary: + newline + content |

Multiple consecutive newlines are collapsed to double newlines. This keeps the structure readable while stripping the XML syntax.
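The transformation described here can be approximated with two regular-expression passes. This is a minimal sketch, not the extracted code: the function name is hypothetical, and trimming whitespace around each tag's content is an assumption.

```python
import re


def format_compaction_output(raw: str) -> str:
    """Replace <analysis>/<summary> tags with plain labels and tidy newlines."""
    text = raw
    for tag, label in (("analysis", "Analysis"), ("summary", "Summary")):
        text = re.sub(
            rf"<{tag}>(.*?)</{tag}>",
            # A callable avoids backslash handling in the replacement string.
            lambda m, label=label: f"{label}:\n{m.group(1).strip()}",
            text,
            flags=re.DOTALL,
        )
    # Collapse runs of three or more newlines down to a single blank line.
    text = re.sub(r"\n{3,}", "\n\n", text)
    return text.strip()
```

Given `<analysis>` and `<summary>` blocks separated by several blank lines, the result is an `Analysis:` section and a `Summary:` section separated by exactly one blank line.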

Post-Compaction Restoration

After summarizing, Claude Code rebuilds context with:

- Boundary marker — marks compaction point in transcript

- Summary message — the compressed conversation (hidden from UI)

- Recent files — up to 5 files, max 5K tokens each, sorted by last access

- Todo list — preserves your task state

- Plan file — if you were in plan mode

- Hook results — output from SessionStart hooks

The file restoration is the key insight: Claude automatically re-reads whatever you were just working on, so you don’t lose your place.
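A rough model of the file-selection step, under stated assumptions: the `FileRead` record and `files_to_restore` function are invented for illustration, and "max 5K tokens each" is interpreted here as truncation rather than skipping oversized files, which the source does not specify.

```python
from dataclasses import dataclass

MAX_FILES = 5                  # restore at most this many files
MAX_TOKENS_PER_FILE = 5_000    # per-file cap (assumed to mean truncation)


@dataclass
class FileRead:
    path: str
    last_access: float         # e.g. a Unix timestamp
    tokens: int


def files_to_restore(reads: list[FileRead]) -> list[FileRead]:
    """Pick the most recently accessed files, capped in count and size."""
    latest: dict[str, FileRead] = {}
    for read in reads:
        # Keep only the newest access per path.
        if read.path not in latest or read.last_access > latest[read.path].last_access:
            latest[read.path] = read

    ranked = sorted(latest.values(), key=lambda r: r.last_access, reverse=True)
    return [
        FileRead(r.path, r.last_access, min(r.tokens, MAX_TOKENS_PER_FILE))
        for r in ranked[:MAX_FILES]
    ]
```

Deduplicating by path first matters: a file read ten times should occupy one of the five slots, not all of them.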

The Continuation Message

After compaction, the summary gets wrapped in this message:

This session is being continued from a previous conversation that ran out

of context. The summary below covers the earlier portion of the conversation.

[SUMMARY]

Please continue the conversation from where we left it off without asking

the user any further questions. Continue with the last task that you were

asked to work on.

Environment Variables

Six variables control compaction behavior:

CLAUDE_CODE_MAX_OUTPUT_TOKENS — Controls how many tokens are reserved for model output. Default is 32K, max is 64K.

CLAUDE_CODE_MAX_OUTPUT_TOKENS=64000 claude

Higher values give the model more room to respond but trigger autocompact earlier (61% instead of 78%).

CLAUDE_AUTOCOMPACT_PCT_OVERRIDE — Directly override the autocompact trigger percentage (1-100).

CLAUDE_AUTOCOMPACT_PCT_OVERRIDE=90 claude

Sets the threshold to 90% of available context (after output reservation). Useful if you want more room before compaction triggers.

DISABLE_AUTO_COMPACT — Disables auto-compaction only. You can still use /compact manually.

DISABLE_AUTO_COMPACT=1 claude

Use this if you want full control over when summarization happens.

DISABLE_COMPACT — Disables all compaction (both auto and manual /compact).

DISABLE_COMPACT=1 claude

Use this if you want to manage context entirely with /clear.

DISABLE_MICROCOMPACT — Disables microcompaction (tool result offloading). All tool results stay in context.

DISABLE_MICROCOMPACT=1 claude

Use this if you need to reference older tool outputs frequently.

CLAUDE_CODE_DISABLE_FEEDBACK_SURVEY — Disables the post-compaction feedback survey.

CLAUDE_CODE_DISABLE_FEEDBACK_SURVEY=1 claude

After compaction, there’s a 20% chance you’ll be asked how it went. This disables that prompt.

Aside: Background Task Summarization

Everything above covers your main conversation. This section describes how background agents manage their own context separately.

When you spawn background or remote agents (via the Task tool), they run in isolated context. Rather than the full 9-section prompt, Claude Code uses delta summarization to track their progress:

You are given a few messages from a conversation, as well as a summary

of the conversation so far. Your task is to summarize the new messages

based on the summary so far. Aim for 1-2 sentences at most, focusing on

the most important details.

This incremental approach tracks progress without storing full context: each update builds on the previous summary rather than reprocessing everything. Your main conversation remains unaffected.
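The incremental pattern can be sketched as a fold over message batches. Everything named here (`track_progress`, `stub_summarizer`, the batch structure) is hypothetical; in practice the summarizer would be a model call using the prompt quoted above.

```python
from typing import Callable, Iterable

# A summarizer takes (summary_so_far, new_messages) and returns the updated summary.
Summarizer = Callable[[str, list[str]], str]


def track_progress(batches: Iterable[list[str]], summarize: Summarizer,
                   summary: str = "") -> str:
    """Fold message batches into a running summary, one delta at a time."""
    for new_messages in batches:
        if not new_messages:
            continue  # nothing new, keep the previous summary
        # Only the prior summary and the new messages are passed along;
        # earlier messages are never reprocessed.
        summary = summarize(summary, new_messages)
    return summary


def stub_summarizer(summary: str, new_messages: list[str]) -> str:
    """Stand-in for the model call: remembers only the latest message."""
    return f"Latest step: {new_messages[-1]}"


if __name__ == "__main__":
    batches = [["ran tests", "2 failures"], ["fixed import", "tests pass"]]
    print(track_progress(batches, stub_summarizer))
```

The cost of each update depends on the size of the new batch and the short running summary, not on the length of the agent's full history.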

Summary

Claude Code’s compaction system:

- Microcompaction — keeps last 3 tool results, offloads rest to disk

- Auto-compaction — triggers at ~78% by default (32K output + 13K safety = 45K reserved)

- Full summarization — 9-section structured prompt preserves intent, errors, and current work

- File restoration — re-reads your 5 most recent files after summarizing

- Continuation message — tells Claude to resume without re-asking what you want

Background agents use separate delta summarization (1-2 sentence incremental updates).

Best Practices

Compact at task boundaries — Don’t wait for auto-compact. Run /compact when you finish a feature or fix a bug, while context is clean.

Clear between unrelated tasks — /clear resets context entirely. Better than polluting context with unrelated work.

Use subagents for exploration — Heavy exploration happens in separate context, keeping your main conversation clean.

Monitor with /context — See what’s consuming space. Disable unused MCP servers.

Further Reading

For those interested in the research foundations behind context management.

The “Lost in the Middle” Problem

Liu et al. (2024) discovered that LLMs exhibit a U-shaped performance curve: they perform best on information at the beginning and end of context, but struggle with information in the middle. At 32K tokens, 11 of 12 tested models dropped below 50% of their short-context performance on mid-document retrieval.

This offers one plausible explanation for why compaction works: by summarizing old content and placing it near the beginning, then restoring recent files at the end, Claude Code positions information where models tend to attend best.

Related work on attention patterns includes StreamingLLM, which found that initial tokens serve as “attention sinks” — receiving disproportionate attention even when not semantically important.

The Quadratic Cost of Attention

The transformer’s self-attention operation (Vaswani et al., 2017) computes QK^T, a matrix multiplication whose cost grows as O(n²) in the sequence length n. For a 128K context window, that is roughly 16 billion pairwise attention scores (128,000² ≈ 1.6 × 10^10) per attention head in every layer.

| Context Length | Relative Compute Cost |
|---|---|
| 4K tokens | 1x |
| 32K tokens | 64x |
| 128K tokens | 1,024x |

Hardware-aware implementations like FlashAttention optimize memory access patterns but don’t change the fundamental scaling. This is why compression isn’t optional for long sessions — it’s economically necessary.
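The relative costs in the table follow directly from the n² term. A small sketch, assuming a 4K-token baseline and counting only the QK^T score matrix (the function name is illustrative):

```python
def relative_attention_cost(tokens: int, baseline: int = 4_000) -> float:
    """Cost of the n x n score matrix relative to a baseline context length."""
    return (tokens / baseline) ** 2


if __name__ == "__main__":
    for n in (4_000, 32_000, 128_000):
        ratio = relative_attention_cost(n)
        print(f"{n:>7} tokens: {ratio:,.0f}x baseline, {n * n:,} score entries")
```

An 8x longer context costs 64x as much, and a 32x longer context costs 1,024x as much, which matches the table.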

Prompt Compression Techniques

Research shows aggressive compression is viable:

LLMLingua (Microsoft, 2023) achieves up to 20x compression with only 1.5% performance loss on reasoning benchmarks. It uses a small model to identify and remove low-information tokens.

Gist Tokens (Stanford, 2023) compresses prompts into learned virtual tokens, achieving 26x compression with 40% compute reduction.

LLMLingua-2 reformulates compression as token classification using a BERT-sized encoder, running 3-6x faster than the original.

References

- @anthropic-ai/claude-code — npm tarball (deobfuscated with webcrack)

- Claude Code Best Practices — Anthropic docs

Compaction manages context within a session. For persisting context across sessions, see Session Memory.